For my Innovation and Professional Development Project I did a lot of research on stereoscopic 3D (S3D). This post covers the most interesting information I found about the topic.

First, let’s talk about why I researched S3D. I think that S3D is the next step in gaming graphics. The additional depth makes a huge difference: the first time you play an S3D game, it is a different and better experience. The difference is not as big as the one between 2D and 3D games, but it reminds me of it. No wonder Sony and Nintendo in particular are pushing this technique. However, S3D often comes with the downside of a lower resolution or frame rate compared to the non-stereoscopic 3D version, and it still struggles with bad displays. And of course you have to wear those stupid glasses for 3D TVs. With the Nintendo 3DS you don’t need glasses, but you still have to hold the 3DS steady to get the correct S3D experience.

OK, enough of an introduction; let’s get a little more technical. In the end, S3D needs two pictures, one for the left eye and one for the right eye. That is the only way we can simulate depth. But how can we render two correct images? To answer this question I had to do some research.

When I started researching the topic, I found presentation slides by Sébastien Schertenleib, who works at Sony Computer Entertainment Europe (SCEE). He published three presentations about S3D on the PS3 on the SCEE website: “PlayStation3 Making Stereoscopic 3D Games”, “Optimization for Making Stereoscopic 3D Games on PS3” and “Making Stereoscopic 3D Games on PlayStation 3”. A lot of the content is the same, but each presentation has some slides that differ.

The slides show which S3D formats the PS3 can output and how the two eye images are packed into a single frame. The PS3 can output S3D video at 1080p and 24 Hz, and for games the console is theoretically capable of 720p at 60 Hz. A 720p S3D frame has a resolution of 1280×1470: the first 720 rows hold the left-eye image, the next 30 rows are a gap filled with black, and the last 720 rows hold the right-eye image.
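To make the 720 + 30 + 720 row layout concrete, here is a minimal Python sketch that packs two 1280×720 eye images into one 1280×1470 frame and splits it again. Images are modelled as plain lists of rows, and the names `pack_frame`, `unpack_frame` and the `BLACK` pixel value are hypothetical; only the row split itself comes from the slides.

```python
WIDTH, EYE_HEIGHT, GAP = 1280, 720, 30
FRAME_HEIGHT = 2 * EYE_HEIGHT + GAP  # 1470 rows in total
BLACK = 0  # hypothetical pixel value used for the black gap rows


def pack_frame(left, right):
    """Stack the left image, the black gap and the right image into one frame."""
    assert len(left) == len(right) == EYE_HEIGHT
    gap = [[BLACK] * WIDTH for _ in range(GAP)]
    return left + gap + right


def unpack_frame(frame):
    """Split a packed 1280x1470 frame back into (left, right) eye images."""
    assert len(frame) == FRAME_HEIGHT
    left = frame[:EYE_HEIGHT]
    right = frame[EYE_HEIGHT + GAP:]  # the right image starts at row 750
    return left, right
```

The only thing a consumer of the frame needs to know is the offset of the right-eye image, which is 720 + 30 = 750 rows.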

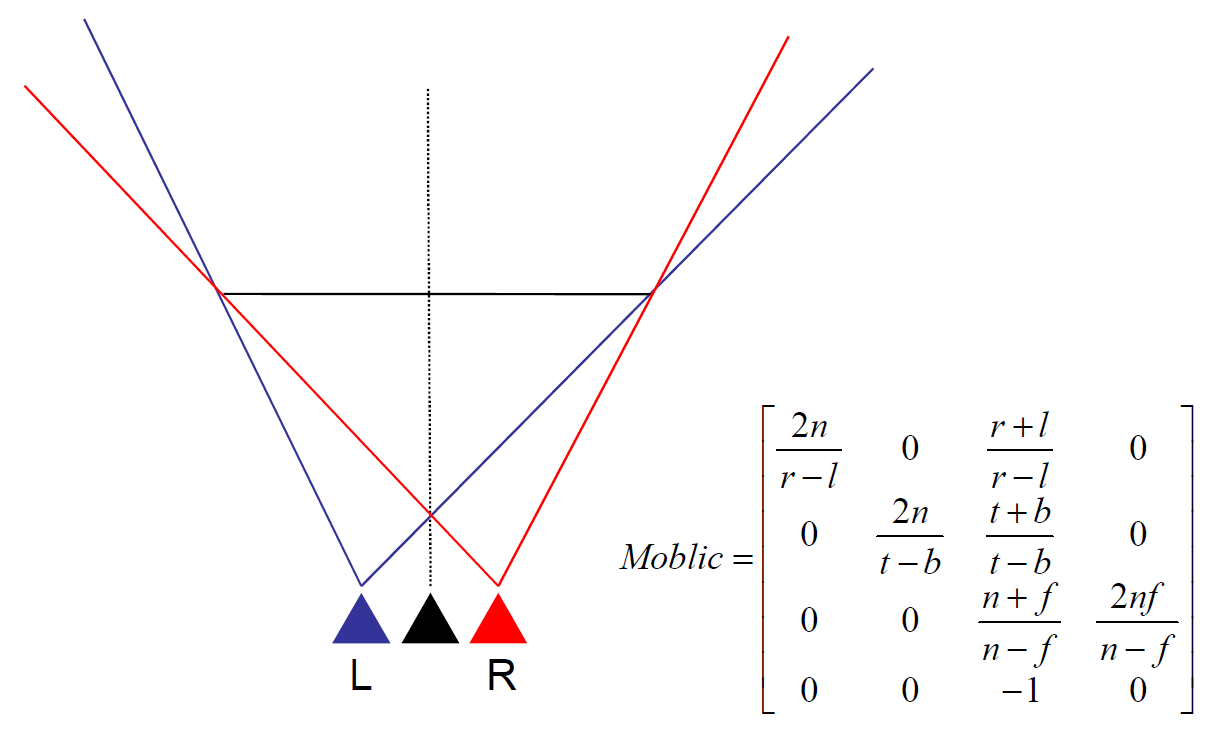

OK, but this still does not answer how we render two different images. One solution is a simple offset of the view frustum: we take the normal view frustum we would use to render for a camera and translate it to the left for the left image and to the right for the right image. The problem with this approach is that parts of the image are visible to only one eye, which causes artefacts. An alternative is Toe-In, where the left and right view frusta are rotated towards the middle after the offset. The problem here is that the convergence plane is not parallel to the screen, which causes vertical parallax deviations. A better solution is Parallel Projection. The image I copied from Sébastien Schertenleib’s slides shows how it works: each camera has its own asymmetric projection matrix. With this technique the smallest portion of the image is visible to only one eye, which makes it the most comfortable technique for the viewer.
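The following Python sketch shows one common way to build such asymmetric frusta: both cameras look straight ahead, are shifted by half the eye separation, and their frusta are skewed so that they share the same window on a chosen convergence (zero-parallax) distance. It assumes OpenGL conventions (camera looks down −z, `glFrustum`-style matrix); the names and parameters are hypothetical and not taken from the slides.

```python
import math
from dataclasses import dataclass


@dataclass
class Frustum:
    left: float
    right: float
    bottom: float
    top: float
    near: float
    far: float
    eye_offset_x: float  # camera translation along x for this eye


def parallel_projection_frusta(fov_y_deg, aspect, near, far, eye_sep, convergence):
    """Off-axis frusta for the left and right eye with parallel view directions."""
    half_tan = math.tan(math.radians(fov_y_deg) / 2)
    top = near * half_tan
    # Half width of the convergence plane as seen by the (virtual) centre camera.
    a = aspect * half_tan * convergence
    b = a - eye_sep / 2
    c = a + eye_sep / 2
    scale = near / convergence  # project convergence-plane extents onto the near plane
    left_eye = Frustum(-b * scale, c * scale, -top, top, near, far, -eye_sep / 2)
    right_eye = Frustum(-c * scale, b * scale, -top, top, near, far, eye_sep / 2)
    return left_eye, right_eye


def frustum_matrix(f):
    """glFrustum-style projection matrix as a row-major 4x4 list."""
    rl, tb, fn = f.right - f.left, f.top - f.bottom, f.far - f.near
    return [
        [2 * f.near / rl, 0, (f.right + f.left) / rl, 0],
        [0, 2 * f.near / tb, (f.top + f.bottom) / tb, 0],
        [0, 0, -(f.far + f.near) / fn, -2 * f.far * f.near / fn],
        [0, 0, -1, 0],
    ]


def project_x(f, point):
    """Normalized device x of a world-space point (x, y, z) seen by this eye."""
    m = frustum_matrix(f)
    x, z = point[0] - f.eye_offset_x, point[2]  # move into this eye's camera space
    clip_x = m[0][0] * x + m[0][2] * z
    clip_w = -z
    return clip_x / clip_w
```

A point on the convergence plane lands at the same screen x in both eyes (zero parallax), while points behind or in front of it get positive or negative parallax. Unlike Toe-In, no camera is rotated, so no vertical parallax is introduced.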

Sébastien Schertenleib also wrote in his slides about accommodation and convergence. Accommodation is the focusing of the eyes; with S3D the eyes stay focused on the screen. Convergence is the inward rotation of the eyes towards the 3D object they look at. If you focus on one spot of the screen and look at objects with different depth values, the accommodation always stays the same, but the convergence changes with the depth of the object.
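The mismatch can be put into numbers with a small Python sketch. It computes the vergence angle for a fixation distance and expresses the accommodation/convergence mismatch in diopters (1/m), a common way of describing it that is my addition, not something from the slides. The 6.5 cm eye separation used in the tests is an assumed typical value.

```python
import math


def vergence_angle_deg(eye_sep_m, distance_m):
    """Angle between the two lines of sight when fixating a point at distance_m."""
    return math.degrees(2 * math.atan(eye_sep_m / (2 * distance_m)))


def accommodation_vergence_conflict(screen_m, object_m):
    """Mismatch in diopters between where the eyes focus and where they converge."""
    accommodation = 1 / screen_m  # the eyes stay focused on the screen
    vergence = 1 / object_m       # the eyes converge on the perceived object
    return abs(accommodation - vergence)
```

With the screen at 2 m, the accommodation demand is always 0.5 diopters, while an object perceived at 1 m asks the eyes to converge as if for 1 diopter, a mismatch of 0.5 diopters. Only objects on the screen plane have no mismatch at all.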

Parallax is also important. Positive parallax means that the object appears behind the screen; for depth into the screen, the image separation has to be positive. If the image separation is zero, the object appears on the screen plane. A negative image separation leads to negative parallax, and the object appears in front of the screen.
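The sign rule follows from similar triangles between the two eyes, the screen and the perceived object. Here is a small Python sketch, assuming a viewer at `screen_dist` from the screen with eye separation `eye_sep` (both hypothetical parameter names, all lengths in the same unit):

```python
def screen_parallax(eye_sep, screen_dist, object_dist):
    """On-screen separation (right-eye image x minus left-eye image x).

    Derived from similar triangles: parallax / eye_sep = (Z - D) / Z,
    where Z is the perceived object distance and D the screen distance.
    """
    return eye_sep * (object_dist - screen_dist) / object_dist


def classify(parallax, tol=1e-9):
    """Map the sign of the parallax to where the object appears."""
    if parallax > tol:
        return "positive: behind the screen"
    if parallax < -tol:
        return "negative: in front of the screen"
    return "zero: on the screen plane"
```

As the object moves towards infinity, the parallax approaches the eye separation, so positive parallax is bounded, while negative parallax grows without limit as the object comes closer to the viewer.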

But what does rendering two images cost? We have to render two images instead of one, so naively it takes twice the computing power of a single image. This is true if S3D rendering is not optimized. Especially on the PS3 and Xbox 360 it is important to use as little computing power as possible to get an S3D effect. On the CPU side, the scene has to be traversed for two cameras, and additional graphics commands are needed for the second image. On the GPU side, the scene has to be rendered twice. One solution is to render at half the resolution and upscale the result afterwards. An alternative is a technique called reprojection: a single image is rendered, and the second image is generated with the help of the depth map. Reprojection doesn’t work for transparency and reflections, and artefacts can appear. However, it reduces the performance impact and therefore allows a higher resolution.
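The core of reprojection can be sketched in Python as a forward warp: every pixel of the rendered (left) image is shifted horizontally by a disparity computed from its depth, a depth test keeps the nearest surface, and pixels that nothing maps to stay empty. The disparity model `max_disparity * (1 - convergence / z)` and all names are assumptions for illustration, not the method from the slides.

```python
import math


def reproject_row(colors, depths, convergence, max_disparity):
    """Warp one row of the left image into the right view using its depth values."""
    width = len(colors)
    out = [None] * width            # None marks a hole (disoccluded area)
    out_depth = [math.inf] * width  # depth buffer for the target row
    for x in range(width):
        z = depths[x]
        # Assumed disparity in pixels: zero at the convergence distance,
        # positive behind it, negative in front of it.
        d = max_disparity * (1.0 - convergence / z)
        tx = round(x + d)
        if 0 <= tx < width and z < out_depth[tx]:  # keep the nearest surface
            out_depth[tx] = z
            out[tx] = colors[x]
    return out


def reproject(image, depth, convergence, max_disparity):
    """Generate the second eye image row by row from one image plus its depth map."""
    return [reproject_row(c, d, convergence, max_disparity) for c, d in zip(image, depth)]
```

The `None` holes are exactly the regions the rendered eye never saw; filling them is where artefacts come from. Because each pixel carries only one depth value, transparent surfaces and reflections cannot be warped correctly either.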

Another very interesting part of the presentations was about depth perception. The depth someone can perceive with S3D depends on the distance to the screen and on the size of the screen. This is a very important fact: in a cinema, for example, the perceived depth differs depending on where you sit.
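To see why screen size and viewing distance matter, the parallax relation can be inverted: the same pixel parallax becomes a different physical separation on a different screen, and therefore a different perceived distance. This Python sketch assumes a 6.5 cm eye separation and hypothetical parameter names.

```python
import math


def perceived_distance(pixel_parallax, screen_width_m, h_res, viewing_dist_m,
                       eye_sep_m=0.065):
    """Perceived distance from the viewer for a given on-screen parallax in pixels.

    Inverts parallax = e * (Z - D) / Z, which gives Z = e * D / (e - parallax).
    """
    p = pixel_parallax * screen_width_m / h_res  # physical parallax in metres
    if p >= eye_sep_m:
        return math.inf  # the eyes would have to diverge: no valid depth
    return eye_sep_m * viewing_dist_m / (eye_sep_m - p)
```

For example, a parallax of 20 pixels on a 1920-pixel-wide image appears about 2.4 m away on a 1 m wide TV viewed from 2 m, but on a 10 m wide cinema screen the same 20 pixels are wider than the eye separation, so the eyes would have to diverge.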

To create S3D content, it is very important to know the comfortable viewing zones. The blue area of the comfortable S3D graphic shows which areas are OK for the viewer and which areas should not be used. Overall, extreme negative and positive parallax should be avoided, because our eyes and brain start to realize that they are being tricked.
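One way to check content against such a comfort zone is to compare the vergence angle for the object with the vergence angle for the screen. This Python sketch uses a limit of 1° of angular disparity, which is a commonly quoted rule of thumb and my assumption, not the zone from the slides; the eye separation is also an assumed typical value.

```python
import math


def vergence_deg(eye_sep, dist):
    """Vergence angle in degrees when fixating a point at dist."""
    return math.degrees(2 * math.atan(eye_sep / (2 * dist)))


def is_comfortable(object_dist, screen_dist, eye_sep=0.065, limit_deg=1.0):
    """True if the angular disparity relative to the screen stays within limit_deg.

    limit_deg = 1.0 is an assumed rule-of-thumb value; tune it per display.
    """
    disparity = vergence_deg(eye_sep, screen_dist) - vergence_deg(eye_sep, object_dist)
    return abs(disparity) <= limit_deg
```

With the screen at 2 m, an object at 2.5 m passes, while both an object very close to the viewer (extreme negative parallax) and one far away (extreme positive parallax) fail the check.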

Now to the most important part of his presentations: the idea of using the PlayStation Eye for a special S3D effect called “Ortho-Stereo”. With head tracking, the view frustum is adjusted to the position of the viewer, which results in an asymmetric field of view. The Ortho-Stereo graphic shows what such an adjusted view frustum looks like. This is something I want to try out, especially inside the HIVE: on a big screen, the ortho-stereo effect could be more impressive than on a small screen.
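A head-tracked frustum can be sketched in Python by treating the physical screen as a window: the frustum edges are the screen edges seen from the tracked eye position, scaled onto the near plane. The tracking itself (PlayStation Eye or otherwise) is not shown; the head position is simply an input. The coordinate convention (screen centred at the origin in the z = 0 plane, viewer at z > 0, metres) and all names are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Frustum:
    left: float
    right: float
    bottom: float
    top: float
    near: float


def head_tracked_frustum(eye, screen_w, screen_h, near):
    """Off-axis frustum for an eye at (x, y, z) in screen coordinates.

    The camera is placed at the eye position looking down -z, unrotated.
    """
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("the viewer must be in front of the screen")
    scale = near / ez  # similar triangles: screen edges -> near plane
    return Frustum(
        left=(-screen_w / 2 - ex) * scale,
        right=(screen_w / 2 - ex) * scale,
        bottom=(-screen_h / 2 - ey) * scale,
        top=(screen_h / 2 - ey) * scale,
        near=near,
    )


def stereo_head_tracked(head, eye_sep, screen_w, screen_h, near):
    """Left and right eye frusta for a tracked head position (eyes along x)."""
    hx, hy, hz = head
    left = head_tracked_frustum((hx - eye_sep / 2, hy, hz), screen_w, screen_h, near)
    right = head_tracked_frustum((hx + eye_sep / 2, hy, hz), screen_w, screen_h, near)
    return left, right
```

When the head is centred, the frustum is symmetric; as the viewer moves sideways, the frustum skews so that the screen keeps behaving like a window into the scene. Computing one frustum per eye combines this with the stereo pair.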

S3D has its limitations. The depth that can be perceived out of the screen is limited and depends on the distance to the screen. Not all areas of the S3D view frustum should be used, because they are not comfortable for the viewer. However, S3D can improve the perceived depth and visual quality of a game if it is done right. Targeting is especially a problem with S3D, because the perceived depth depends on the viewer’s distance and the screen size. These are two parameters a developer doesn’t know, and they differ for every gaming setup. This is where the PlayStation Eye can help: with face tracking, it is possible to track the player’s position and adjust the S3D effect accordingly. Ortho-Stereo goes one step further and uses the player’s position to adjust the view frustum. Johnny Chung Lee showed how impressive this effect can be with a head-tracking demo built on the Wii Remote. Combining this effect with two rendered images could improve it even further.